My testimony concerns the selective science that underlies the existing federal determination of the Social Cost of Carbon and how a more inclusive and considered process would have resulted in a lower value for the social cost of carbon.

In 2015, the federal government’s Interagency Working Group (IWG) on the Social Cost of Carbon released a report responding to public comments on the IWG’s determination of the social cost of carbon, comments that the Office of Management and Budget had solicited in November 2013. Of the 140 unique sets of substantive comments received (including a set of my own), the IWG adopted none. Apart from some minor updates to its discussion of uncertainty, the IWG, in its most recent August 2016 report, retained the same, now obsolete, methodologies that it used in its initial 2010 SCC determination.

Here, I address why this decision was based on a set of flimsy, internally inconsistent excuses and amounts to a continuation of the IWG’s exclusion of the most relevant science. That exclusion assures that low, or even negative, values of the social cost of carbon (which would imply a net benefit of increased atmospheric carbon dioxide levels) do not find their way into cost/benefit analyses of proposed federal actions. If, in fact, the social cost of carbon were near zero, it would eliminate the justification for any federal action (greenhouse gas emissions regulations, ethanol mandates, miles per gallon standards, solar/wind subsidies, DoE efficiency regulations, etc.) geared towards reducing carbon dioxide emissions.

Equilibrium Climate Sensitivity

In May 2013, the Interagency Working Group produced an updated SCC value by incorporating revisions to the three underlying Integrated Assessment Models (IAMs) that it had used in its initial 2010 SCC determination. At that time, however, the IWG did not update the equilibrium climate sensitivity (ECS) employed in the IAMs. It left the ECS unchanged even though, since January 1, 2011, at least 16 new studies and 32 experiments (involving more than 50 researchers) have examined the ECS, each lowering the best estimate and tightening the error distribution about that estimate. Instead, the IWG wrote in its 2013 report: “It does not revisit other interagency modeling decisions (e.g., with regard to the discount rate, reference case socioeconomic and emission scenarios, or equilibrium climate sensitivity).”

The IWG reaffirmed this decision in July 2015 and again in its most recent August 2016 report. In doing so, the IWG has again refused to recognize what is now becoming mainstream science: the most likely value of the equilibrium climate sensitivity is lower than that used by the IWG, and the estimate is much better constrained. This situation has profound implications for the determination of the SCC and yet continues to be summarily dismissed by the IWG.

The earth’s equilibrium climate sensitivity is defined by the IWG in its 2010 report (hereafter, IWG2010) as “the long-term increase in the annual global-average surface temperature from a doubling of atmospheric CO2 concentration relative to pre-industrial levels (or stabilization at a concentration of approximately 550 parts per million (ppm))” and is recognized as “a key input parameter” for the integrated assessment models used to determine the social cost of carbon.

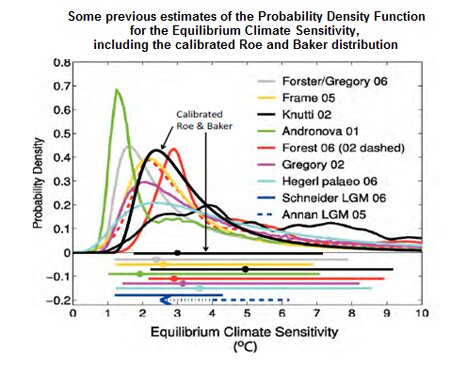

The IWG2010 report has an entire section (Section III.D) dedicated to describing how an estimate of the equilibrium climate sensitivity and the scientific uncertainties surrounding its actual value are developed and incorporated in the IWG’s analysis. The IWG2010, in fact, developed its own probability density function (pdf) for the ECS and used it in each of the three IAMs, superseding the ECS pdfs used by the original IAM developers. The IWG’s intent was to develop an ECS pdf that most closely matched the description of the ECS given in the Fourth Assessment Report of the United Nations’ Intergovernmental Panel on Climate Change, which was published in 2007.

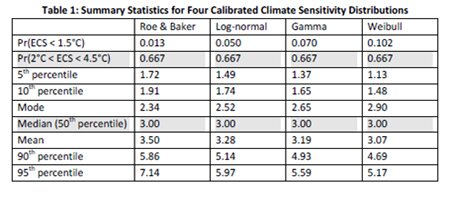

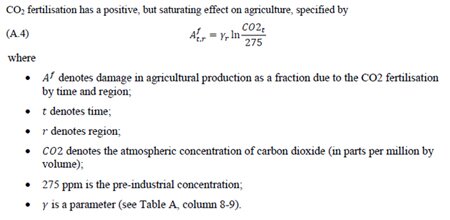

The functional form adopted by the IWG2010 was a calibrated version of the Roe and Baker (2007) distribution. It is described in the following table and figure from the IWG2010 report:

The calibrated Roe and Baker functional form used by the IWG2010 is no longer scientifically defensible; nor was it at the time of the publication of the IWG 2013 SCC update, nor at the time of the August 2016 update.
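For readers unfamiliar with it, the general shape of the Roe and Baker (2007) distribution can be sketched as follows. This is the uncalibrated form as I understand it from the published paper, not the IWG's calibration: the symbols $\Delta T_0$ (the no-feedback warming), $f$ (the total feedback factor), $\bar{f}$ and $\sigma_f$ (its assumed mean and standard deviation) do not appear in this testimony, and the IWG's calibrated parameter values and truncation are not reproduced here.

```latex
% Climate sensitivity as a function of the feedback factor f (assumed notation)
\Delta T = \frac{\Delta T_0}{1 - f}, \qquad f \sim \mathcal{N}\!\left(\bar{f}, \sigma_f^2\right)

% Implied probability density of \Delta T, obtained by change of variables
% with f = 1 - \Delta T_0 / \Delta T and df/d\Delta T = \Delta T_0 / \Delta T^2
h(\Delta T) = \frac{1}{\sigma_f \sqrt{2\pi}} \, \frac{\Delta T_0}{\Delta T^{2}}
  \exp\!\left[ -\frac{1}{2} \left( \frac{1 - \bar{f} - \Delta T_0 / \Delta T}{\sigma_f} \right)^{2} \right]
```

Because $\Delta T$ grows without bound as $f$ approaches 1, even a symmetric uncertainty in $f$ produces a long upper tail in $\Delta T$, which is why the upper percentile of such a distribution sits so far above its median.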

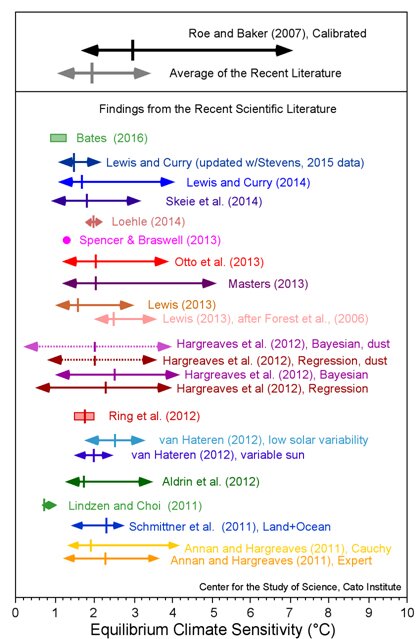

The figure below vividly illustrates this fact, as it compares the best estimate and 90% confidence range of the earth's ECS as used by the IWG (calibrated Roe and Baker) against findings in the scientific literature published since January 1, 2011.

Whereas the IWG ECS distribution has a median value of 3.0°C and 5th and 95th percentile values of 1.72°C and 7.14°C, respectively, the corresponding values averaged from the recent scientific literature are ~2.0°C (median), ~1.1°C (5th percentile), and ~3.5°C (95th percentile).

These differences will have large and significant impacts on the SCC determination.
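The size of these differences can be tabulated directly from the percentile values quoted above. The short Python sketch below only does that arithmetic; the variable and function names are illustrative, and the "recent literature" figures are the approximate averages stated in this testimony, not newly computed values.

```python
# Percentile values (degrees C) as quoted in the testimony.
# "recent" holds the approximate literature averages (~ values in the text).
iwg = {"p05": 1.72, "median": 3.0, "p95": 7.14}
recent = {"p05": 1.1, "median": 2.0, "p95": 3.5}


def range_width(dist):
    """Width of the 5th-to-95th percentile range, rounded to 2 decimals."""
    return round(dist["p95"] - dist["p05"], 2)


# How far each IWG percentile sits above the recent-literature average.
differences = {key: round(iwg[key] - recent[key], 2) for key in iwg}

# Width of the 90% range under each set of estimates.
widths = {"iwg": range_width(iwg), "recent": range_width(recent)}

if __name__ == "__main__":
    for key, value in differences.items():
        print(f"IWG minus recent literature, {key}: {value} C")
    for name, value in widths.items():
        print(f"90% range width ({name}): {value} C")
```

On these figures, the IWG median exceeds the literature average by 1.0°C, the 5th percentile by 0.62°C, and the 95th percentile by 3.64°C, and the IWG's 90% range (5.42°C wide) is more than twice as wide as the literature average (2.4°C).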

CAPTION: The median (indicated by the small vertical line) and 90% confidence range (indicated by the horizontal line with arrowheads) of the climate sensitivity estimate used by the Interagency Working Group on the Social Cost of Carbon (Roe and Baker, 2007) are indicated by the top black arrowed line. The average of the similar values from 22 different determinations reported in the recent scientific literature is given by the grey arrowed line (second line from the top). The sensitivity estimates from the 32 individual determinations of the ECS as reported in new research published after January 1, 2011 are indicated by the colored arrowed lines. The arrows indicate the 5 to 95% confidence bounds for each estimate along with the best estimate (median of each probability density function; or the mean of multiple estimates; colored vertical line). Ring et al. (2012) present four estimates of the climate sensitivity and the red box encompasses those estimates. Likewise, Bates (2016) presents eight estimates and the green box encompasses them. Spencer and Braswell (2013) produce a single ECS value best-matched to ocean heat content observations and internal radiative forcing.

In addition to recent studies aimed at directly determining the equilibrium climate sensitivity (included in the chart above), several other major studies have produced results that qualitatively suggest a climate sensitivity lower than mainstream estimates (e.g., the Roe and Baker calibration). Such studies include new insights on cloud condensation nuclei and cosmic rays (Kirkby et al., 2016), radiative forcing of clouds (Bellouin, 2016; Stevens, 2015), cloud processes (Mauritsen and Stevens, 2015), and the underestimation of terrestrial CO2 uptake (Sun et al., 2014).

The IWG2010 report noted that, concerning the low end of the ECS distribution, its determination reflected a greater degree of certainty that a low ECS value could be excluded than did the IPCC. From the IWG2010 (p. 14):

“Finally, we note the IPCC judgment that the equilibrium climate sensitivity “is very likely larger than 1.5°C.” Although the calibrated Roe & Baker distribution, for which the probability of equilibrium climate sensitivity being greater than 1.5°C is almost 99 percent, is not inconsistent with the IPCC definition of “very likely” as “greater than 90 percent probability,” it reflects a greater degree of certainty about very low values of ECS than was expressed by the IPCC.”

In other words, the IWG used its judgment that the lower bound of the ECS distribution was higher than the IPCC 2007 assessment indicated. However, the collection of the recent literature on the ECS shows the IWG’s judgment to be in error. As can be seen in the chart above, the large majority of the findings on ECS in the recent literature indicate that the lower bound (i.e., 5th percentile) of the ECS distribution is lower than the IPCC 2007 assessment indicated. Moreover, the average value of the 5th percentile in the recent literature (~1.1°C) is 0.62°C less than the 1.72°C used by the IWG, a sizeable and important difference that will influence the SCC determination.

In fact, the abundance of literature supporting a lower climate sensitivity was at least partially reflected in the new IPCC assessment report issued in 2013. In that report, the IPCC reported: